This is a short story about how SMOC.AI switched to CloudFlare to build a personalized automated sales agent bot with low latency and high scalability.

SMOC.AI’s sales agent bot plays an important role in our customers’ lead acquisition strategy. Every month it conducts hundreds of thousands of conversations with visitors to our customers’ web sites.

The bot asks a series of questions to the visitor as a quiz. The visitor then chooses between multiple answers by pressing buttons. The next question the bot asks depends on previous answers. At the end of the conversation the visitor is often asked to leave their contact information for a follow-up personalized offer.

These conversations increase lead generation rates for our customers by 200-500%.

Our customers love it, and happy customers always ask for more. Some of our most popular feature requests are:

- Can you tailor the conversation to every visitor to improve lead generation rates even more?

- We want to reach audiences outside the web (Discord / SnapChat etc). Can you make it work there?

-

We’re in Asia - why does it take so long to load the bot?

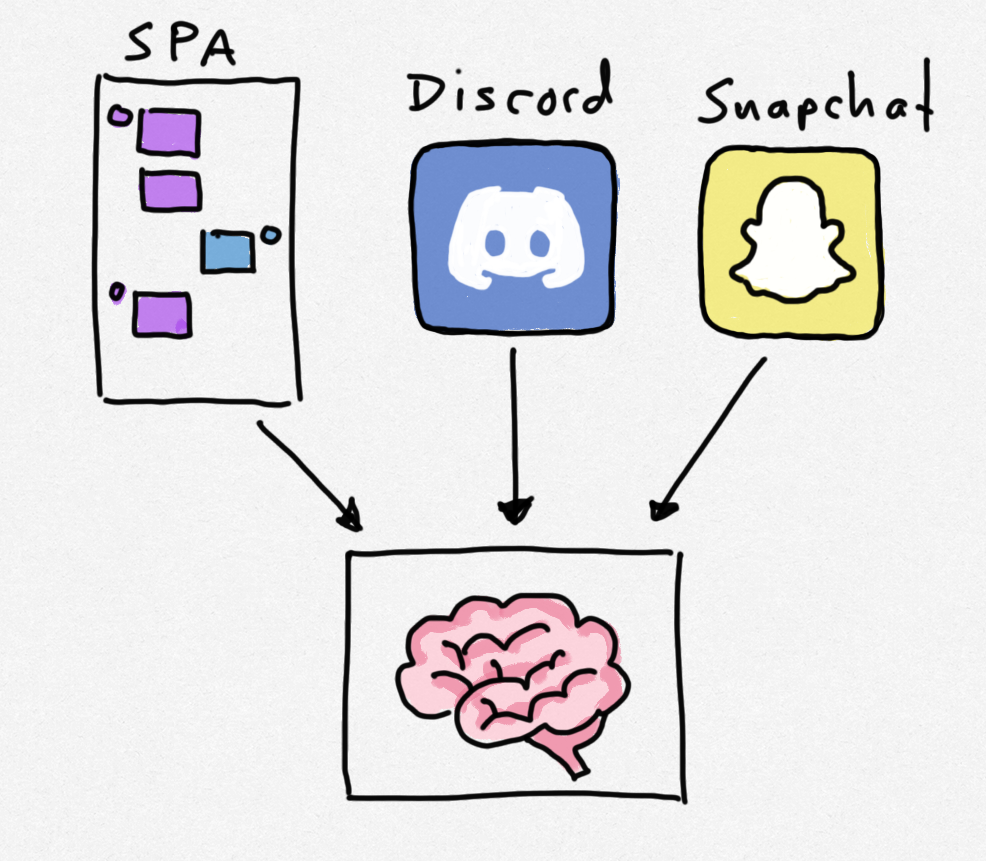

- The Rails Webapp served HTML, CSS, SPA code and a flow JSON to the browser

- The SPA talked to an API server over HTTP

- The API server received answers and wrote them to Postgres

- The Postgres database stored all the answers

The SPA would load a directed graph of all of possible bot messages (a flow) into memory at the start of a conversation. Each time a visitor pressed a button, the SPA would follow an edge in the graph to the next question and display that on the screen.

All the decisions about what the bot should say next was done in the SPA running in the browser.

The bot’s (pea) brain was literally in the browser.

In order to provide a personalized experience to each visitor, we decided to build a machine learning model to decide the best edge to follow next. We had to make the brain smarter and bigger. This would be a lot easier to pull off if we moved the conversation logic from the browser to the server.

With the conversation logic running on a server we would need to push bot messages to the client devices. On the web, this would be done over a WebSocket.

We’d need to handle at least 10k concurrent WebSockets on a regular basis, and over 100k whenever our customers run big advertising campaigns causing spikes.

This kind of concurrent I/O would not work well on the dedicated EC2 servers we had. That would be one hot brain! Or maybe 10, but still hot. We needed an architecture that could scale quicker, and at a lower cost.

We already knew that our current architecture could lead to write congestion in the Postgres database, so we were also looking to avoid that.

Our new architecture would have to handle massive loads, and it would also have to be available around the globe so that visitors don’t have to wait more than 100ms for the bot to load. In other words, it would have to live on the edge.

Since we were already using AWS, we first considered using lambdas. Lambdas are great, but they don’t support WebSocket connections unless you jump through hoops.

We decided to take a closer look at CloudFlare’s Durable Objects, and quickly realized this would be a perfect match for our needs. If you’re not familiar with Durable Objects, think of them like a long-running lambdas with state and a dedicated WebSocket.

It took two engineers a few months to rewrite the bot engine for this architecture. As part of this work we also adopted Cloudflare KV to store conversation data instead of Postgres.

Whenever a new visitor starts a conversation, Cloudflare immediately spins up a new Durable Object to handle the conversation. We don't need to worry about scaling anymore.

So far the Cloudflare stack has been great to work with. It doesn't just scale extremely well, it also has excellent Developer Experience (DX) so we can spend more time building software and less time managing infrastructure.

We have a suite of hundreds of automated tests that run in about 2 seconds, so iterating and experimenting is extremely quick.

With this architecture in place we're well positioned to start building our new machine learning model which we'll hook into all these little brains. It will use bayesian inference to select edges in our flow graphs. I'll tell you more about that in a future blog post.

SMOC.AI is hiring. We're looking for full-stack developers with ML experience. If you're interested in learning more, send an email to aslak@smoc.ai.